100% Hallucination-Free AI

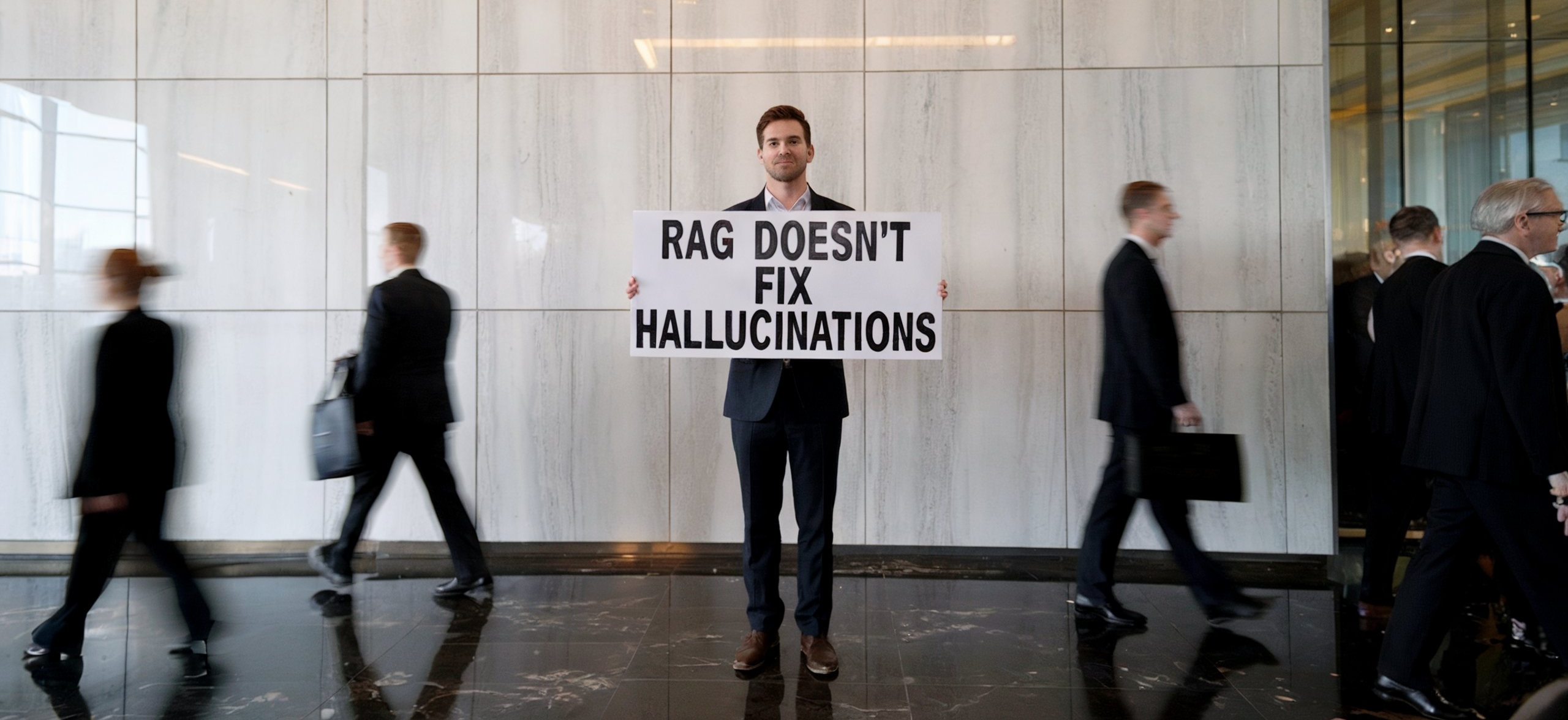

Hallucinations in large language models (LLMs) remain a critical barrier to adopting AI in enterprise and other high-stakes applications.

Despite advances in retrieval-augmented generation (RAG), state-of-the-art methods fail to exceed 80% accuracy, even when given relevant and accurate context.

Acurai introduces a groundbreaking solution that eliminates 100% of hallucinations in LLM-generated outputs.

The Problem: Why Hallucinations Persist

Even with state-of-the-art methods like retrieval-augmented generation (RAG), current AI systems fall short. Even when LLMs are given accurate context, these systems reach at most 80% accuracy on key benchmarks. This gap underscores a critical challenge: ensuring that AI outputs are both faithful to their sources and correct.

Key Challenges:

- Zero-shot hallucinations: Models "guess" answers without domain-specific context, often fabricating convincing but incorrect responses.
- Misinformation risks: Even authoritative-sounding outputs can mislead users when they are factually incorrect.
- RAG limitations: As practitioners know well, even when LLMs are augmented with external databases, RAG pipelines cannot guarantee hallucination-free outputs.

The Solution: Acurai's Novel Approach

Acurai redefines what's possible with LLMs. By leveraging a deep understanding of internal representations, noun-phrase dominance, and discrete functional units (DFUs), Acurai ensures that models generate factually correct and faithful responses every time.

Key Innovations:

1. Systematic Input Reformatting: Queries and context are restructured so that the model interprets them correctly.
2. Noun-Phrase Dominance Model: This method aligns the model's processing with critical linguistic elements, improving clarity and accuracy.
3. Validation with RAGTruth: In extensive testing on the RAGTruth corpus, Acurai achieved a historic milestone: 100% hallucination-free responses with both GPT-4 and GPT-3.5 Turbo.

Breakthrough Results

RAGTruth Corpus

Partner with us

If you need hallucination-free AI, please fill out the short form below.

As you might imagine, we currently have a waiting list. We raised a $2M pre-seed round in October 2024 and aim to be fully production-ready by summer 2025.

In the meantime, we would be very interested in hearing about your project and the problems you are experiencing with hallucinations, as your project may dovetail with our own early-access development timelines.

We will also add you to our newsletter list and send you updates as we publish more papers.

Welcome to the revolution!

Michael & Adam

Co-Founders, Acurai